2025

Lua-LLM: Learning Unstructured-Sparsity Allocation for Large Language Models

Mingge Lu, Jingwei Sun, Junqing Lin, Zechun Zhou, Guangzhong Sun

Advances in Neural Information Processing Systems (NeurIPS) 2025

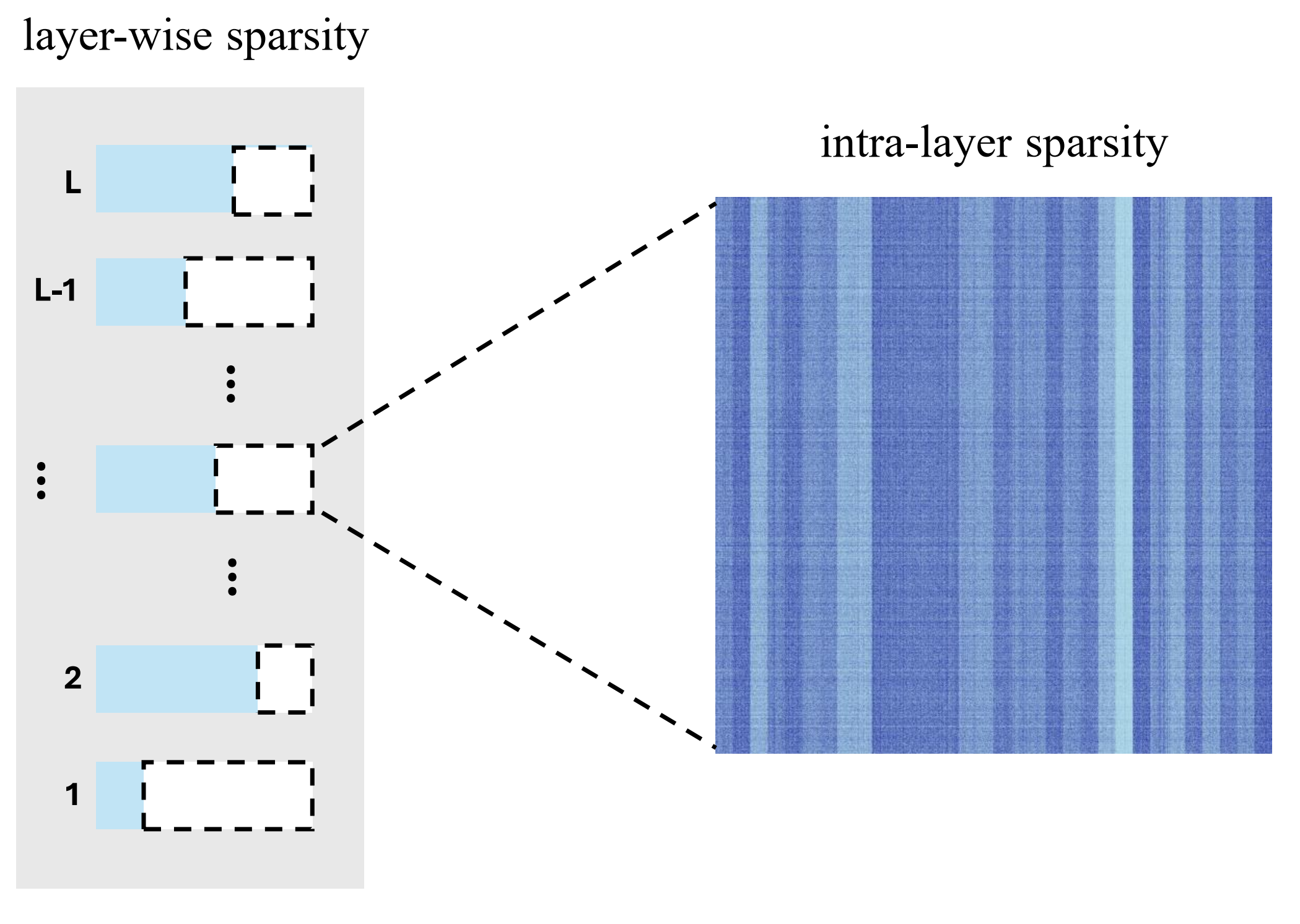

We propose Lua-LLM (Learning unstructured-sparsity allocation in LLMs), a learning-based global pruning framework that explores the optimal unstructured sparsity allocation. Unlike existing pruning methods, which primarily focus on allocating per-layer sparsity, Lua-LLM achieves flexible allocation for both layer-wise and intra-layer sparsity.
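To make the distinction between layer-wise and intra-layer sparsity concrete, here is a minimal sketch. It does not implement Lua-LLM's learned allocation. It substitutes plain global magnitude pruning, a much simpler technique, to show how a single global criterion produces different sparsity per layer and per row. The function names `global_magnitude_masks` and `layer_sparsity` and the toy weights are assumptions made for this illustration.

```python
def global_magnitude_masks(layers, sparsity):
    """Return keep-masks (True = keep) using one magnitude threshold for all layers.

    `layers` maps a layer name to a 2-D list of weights. This is plain global
    magnitude pruning, used here only as a stand-in for a learned allocation.
    """
    # Pool the magnitudes of every weight in every layer.
    magnitudes = sorted(abs(w) for rows in layers.values() for row in rows for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    # Weights below the threshold are pruned. With tied magnitudes, fewer
    # weights than the target may be pruned.
    threshold = magnitudes[n_prune] if n_prune < len(magnitudes) else float("inf")
    return {
        name: [[abs(w) >= threshold for w in row] for row in rows]
        for name, rows in layers.items()
    }


def layer_sparsity(masks):
    """Fraction of pruned weights in each layer."""
    result = {}
    for name, rows in masks.items():
        flat = [keep for row in rows for keep in row]
        result[name] = flat.count(False) / len(flat)
    return result


# Toy weights (hypothetical): layer0 holds mostly large values, layer1 mostly small ones.
layers = {
    "layer0": [[0.9, -0.8], [0.7, 0.05]],
    "layer1": [[0.01, -0.02], [0.03, 0.6]],
}
masks = global_magnitude_masks(layers, sparsity=0.5)
print(layer_sparsity(masks))
```

At an overall sparsity of 50%, the layers end up with different sparsity levels, and the two rows of `layer0` differ as well. A uniform per-layer scheme could not produce this kind of non-uniform allocation.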

Toward Efficient SpMV in Sparse LLMs via Block Extraction and Compressed Storage

Junqing Lin, Jingwei Sun, Mingge Lu, Guangzhong Sun

arXiv:2507.12205

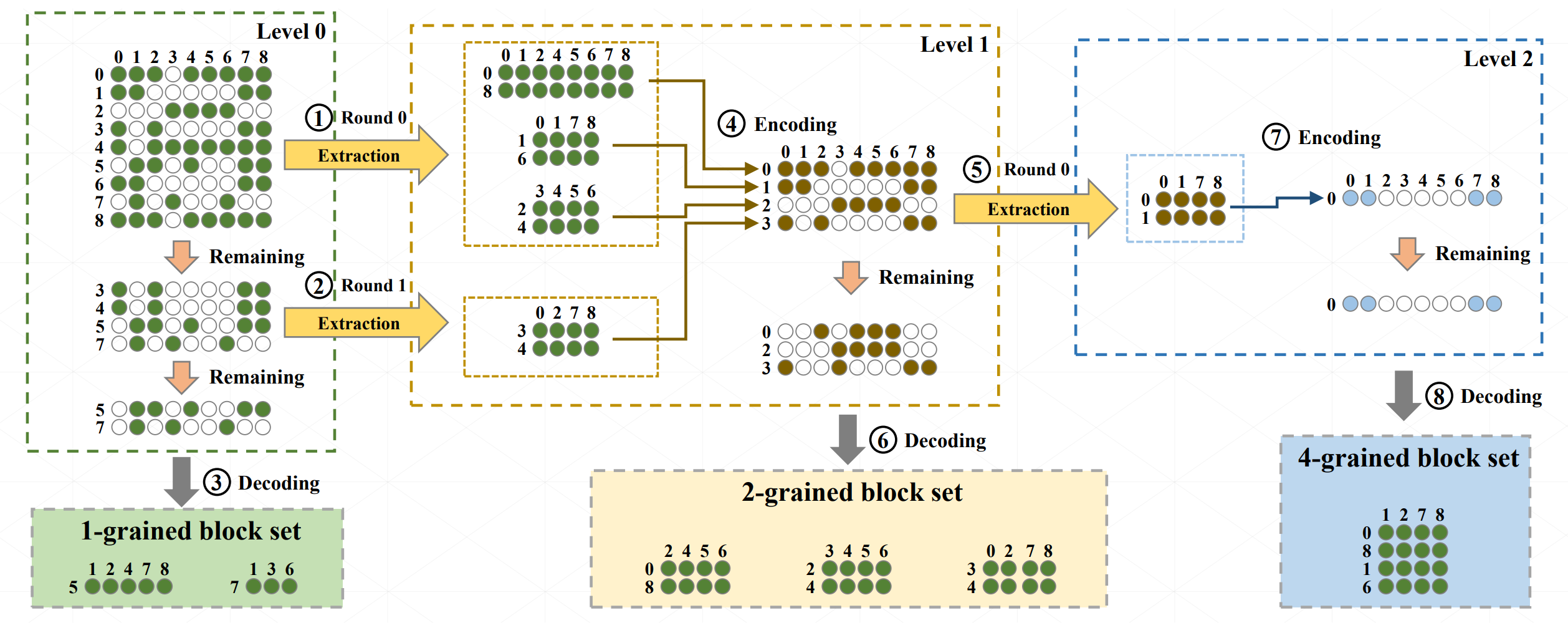

This paper presents EC-SpMV, a GPU-optimized SpMV approach for accelerating sparse LLM inference. EC-SpMV introduces (1) a hierarchical block extraction algorithm that captures multiple granularities of block structures within sparse LLMs, and (2) a novel compressed sparse format (EC-CSR) that employs delta indexing to reduce storage overhead and enhance memory access efficiency.
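The idea of delta indexing can be sketched on top of ordinary CSR. The code below is a simplified illustration, not the EC-CSR layout from the paper. It assumes each column index is stored as its offset from the previous nonzero in the same row, and it omits block extraction entirely. The names `to_delta_csr` and `spmv_delta_csr` are hypothetical.

```python
def to_delta_csr(dense):
    """Convert a dense 2-D list to CSR with delta-encoded column indices.

    Each stored index is the distance from the previous nonzero column in the
    same row (the first nonzero is measured from column 0).
    """
    values, col_deltas, row_ptr = [], [], [0]
    for row in dense:
        prev_col = 0
        for col, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_deltas.append(col - prev_col)
                prev_col = col
        row_ptr.append(len(values))
    return values, col_deltas, row_ptr


def spmv_delta_csr(values, col_deltas, row_ptr, x):
    """Compute y = A @ x, rebuilding column indices by a running sum of deltas."""
    y = []
    for r in range(len(row_ptr) - 1):
        col = 0
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            col += col_deltas[k]  # recover the absolute column index
            acc += values[k] * x[col]
        y.append(acc)
    return y


dense = [
    [0, 0, 3, 0, 4],
    [5, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 6, 0, 7, 0],
]
values, col_deltas, row_ptr = to_delta_csr(dense)
print(col_deltas)  # [2, 2, 0, 1, 2]
print(spmv_delta_csr(values, col_deltas, row_ptr, [1, 2, 3, 4, 5]))  # [29.0, 5.0, 0.0, 40.0]
```

Deltas are usually much smaller than absolute column indices, so they can fit in fewer bits per entry. This is one plausible way such a format reduces storage and memory traffic.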