Master Student in CS @ USTC

B.Eng. in AI, School of the Gifted Young @ USTC

My research interests lie broadly in designing efficient machine learning systems, including model compression algorithms (sparsity, quantization, neural architecture search) and co-designed GPU kernels.

Education

- University of Science and Technology of China, Master Student, School of Computer Science and Technology, Sep. 2024 - present

- University of Science and Technology of China, B.Eng. in Artificial Intelligence (Honor), School of the Gifted Young, Sep. 2020 - Jul. 2024

News

Selected Publications

Lua-LLM: Learning Unstructured-Sparsity Allocation for Large Language Models

Mingge Lu, Jingwei Sun, Junqing Lin, Zechun Zhou, Guangzhong Sun

Advances in Neural Information Processing Systems (NeurIPS) 2025

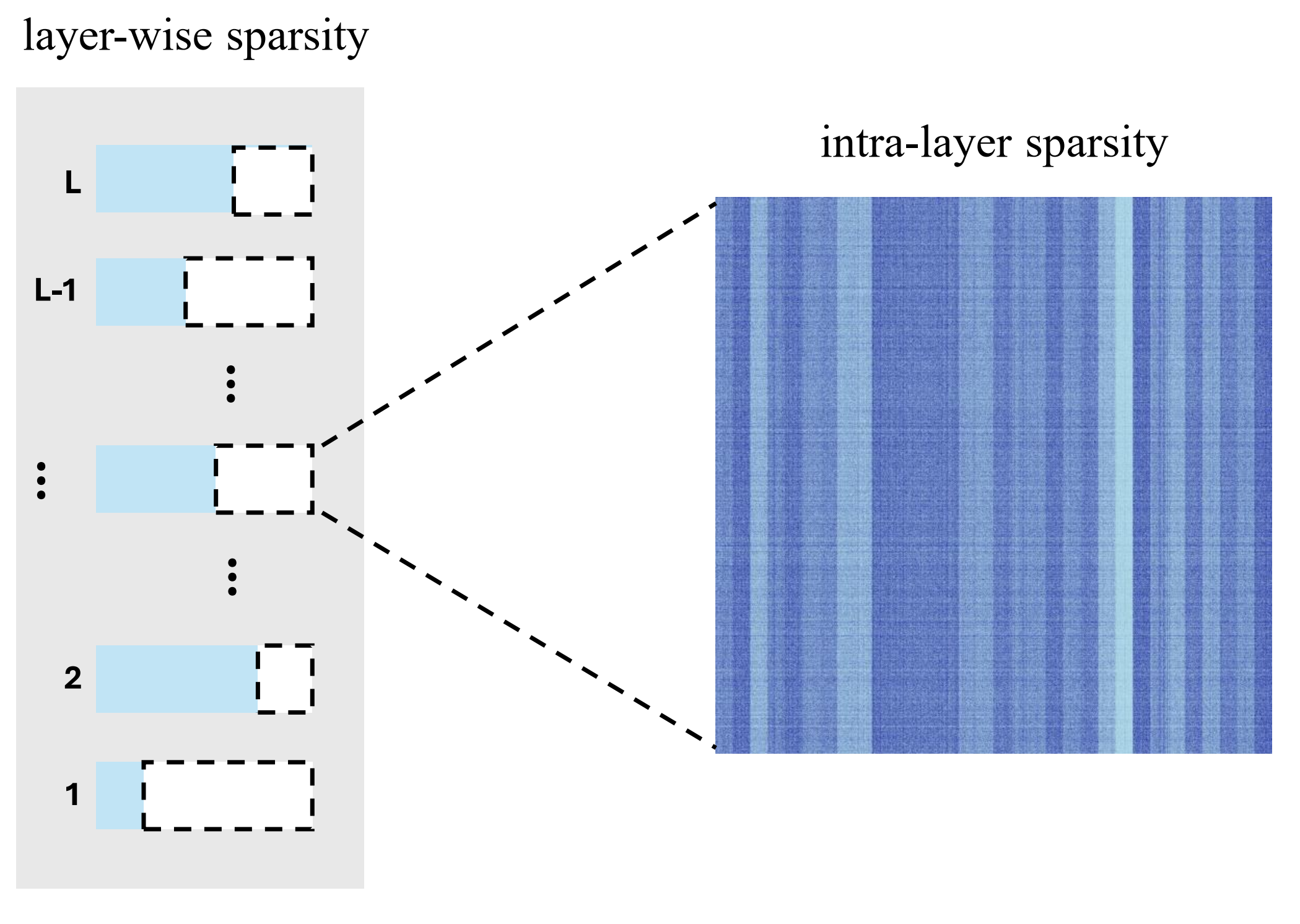

We propose Lua-LLM (Learning unstructured-sparsity allocation in LLMs), a learning-based global pruning framework that explores the optimal unstructured sparsity allocation. Unlike existing pruning methods, which primarily focus on allocating per-layer sparsity, Lua-LLM achieves flexible allocation for both layer-wise and intra-layer sparsity.

Toward Efficient SpMV in Sparse LLMs via Block Extraction and Compressed Storage

Junqing Lin, Jingwei Sun, Mingge Lu, Guangzhong Sun

arXiv:2507.12205

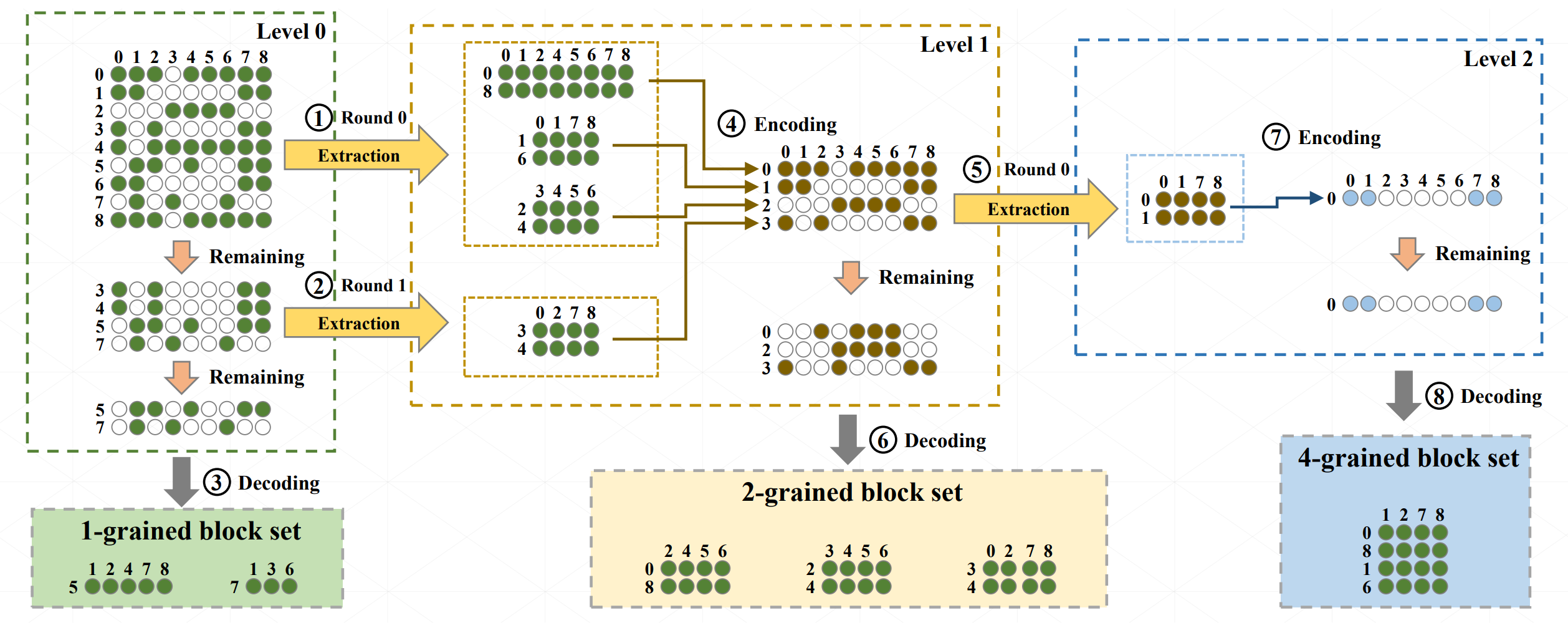

This paper presents EC-SpMV, a GPU-optimized SpMV approach for accelerating sparse LLM inference. EC-SpMV introduces (1) a hierarchical block extraction algorithm that captures multiple granularities of block structures within sparse LLMs, and (2) a novel compressed sparse format (EC-CSR) that employs delta indexing to reduce storage overhead and enhance memory access efficiency.
